From Polarisation to Dialogue: Developing Value-Aware AI for Social Media

Integrating moral awareness into intelligent systems to shape healthier digital communication environments.

Understanding Human Values in Social Media with AI

Polarisation is a growing challenge in social media environments, often manifesting through hostility toward opposing groups. Research shows that content expressing strong emotions and moral values can trigger such adversarial dynamics. To design interventions that promote more constructive dialogue, it is essential to understand the diversity of viewpoints that emerge in public debates and to identify morally charged content that might escalate tensions.

In VALAWAI, we are developing tools that enable artificial intelligence systems to detect and interpret moral values in social media content. These tools are integrated within a broader architecture designed to analyse public debates and to inform interventions, such as value-aware recommendation systems, that encourage prosocial online interactions.

Mapping the Moral Landscape of Public Debate

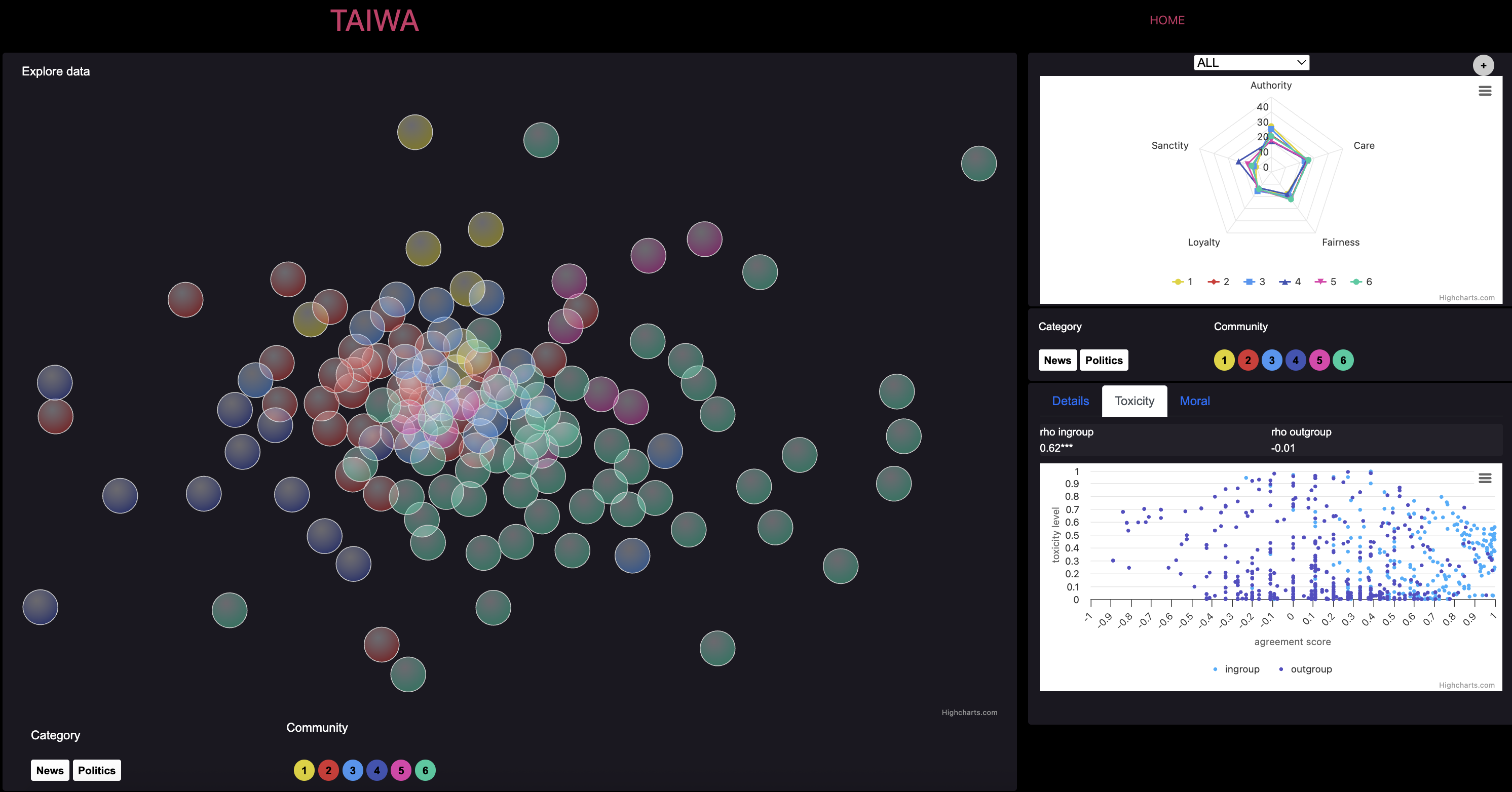

Our first milestone focuses on demonstrating the potential of these systems by analysing the entire public debate of a European country over several years. By examining the online activity of influential actors, including media outlets and political organisations, we created TAIWA, a platform that offers a large-scale view of how moral and emotional dimensions shape the discourse on Twitter in Italy. This analysis provides new insights into how information flows and how polarisation evolves within digital ecosystems.

Towards Value-Aware Recommendations for Social Dialogue

Building on these insights and through engagement with communication authorities, journalistic organisations, and social science researchers, we are developing new algorithms for content recommendation. These algorithms aim to suggest out-group information (i.e. content from different perspectives) in ways that minimise the risk of toxic reactions while maintaining high engagement. The ultimate goal is to highlight content that can foster healthy conversation among users with different viewpoints, helping to bridge opposing communities and to support online engagement that is genuinely social.
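To make the trade-off concrete, the following is a minimal illustrative sketch, not the VALAWAI algorithm itself. It assumes that each candidate post already carries a predicted engagement score and a predicted toxicity risk (both hypothetical values in the range 0 to 1 produced by some upstream model), and it ranks out-group posts by engagement penalised by risk. The names `Candidate`, `rank_out_group`, and `risk_weight` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A post that could be recommended (all fields are illustrative assumptions)."""
    item_id: str
    author_group: str               # community the author belongs to
    predicted_engagement: float     # assumed model output in [0, 1]
    predicted_toxicity_risk: float  # assumed model output in [0, 1]


def rank_out_group(candidates, user_group, risk_weight=1.0, top_k=3):
    """Return the top_k out-group posts, scored as engagement minus weighted risk."""
    # Keep only content from communities other than the user's own.
    out_group = [c for c in candidates if c.author_group != user_group]
    # Higher engagement raises the score; higher toxicity risk lowers it.
    ranked = sorted(
        out_group,
        key=lambda c: c.predicted_engagement - risk_weight * c.predicted_toxicity_risk,
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    posts = [
        Candidate("a", "A", 0.9, 0.1),  # in-group for a user in "A", so excluded
        Candidate("b", "B", 0.8, 0.7),  # engaging but likely to provoke toxicity
        Candidate("c", "B", 0.6, 0.1),
        Candidate("d", "C", 0.5, 0.2),
    ]
    for post in rank_out_group(posts, user_group="A", top_k=2):
        print(post.item_id)
```

In this sketch a larger `risk_weight` favours safer content at the cost of engagement, while a value of zero reduces the ranking to engagement alone. A real system would also need to decide how group membership and the two scores are estimated, which this example leaves open.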

Results

Online news ecosystem dynamics: supply, demand, diffusion, and the role of disinformation

Moral values in social media for disinformation and hate

If you are an editor or a publisher, or if you are interested in creating a safer and more ethical media environment, please contact:

Lissette Lemus